# paperify

**Repository Path**: mirrors/paperify

## Basic Information

- **Project Name**: paperify

- **Description**: Paperify 可将任何文档、网页或电子书转化为研究论文

- **Primary Language**: Shell

- **License**: MIT

- **Default Branch**: master

- **Homepage**: https://www.oschina.net/p/paperify

- **GVP Project**: No

## Statistics

- **Stars**: 2

- **Forks**: 0

- **Created**: 2023-09-14

- **Last Updated**: 2026-02-07

## Categories & Tags

**Categories**: Uncategorized

**Tags**: None

## README

# Paperify

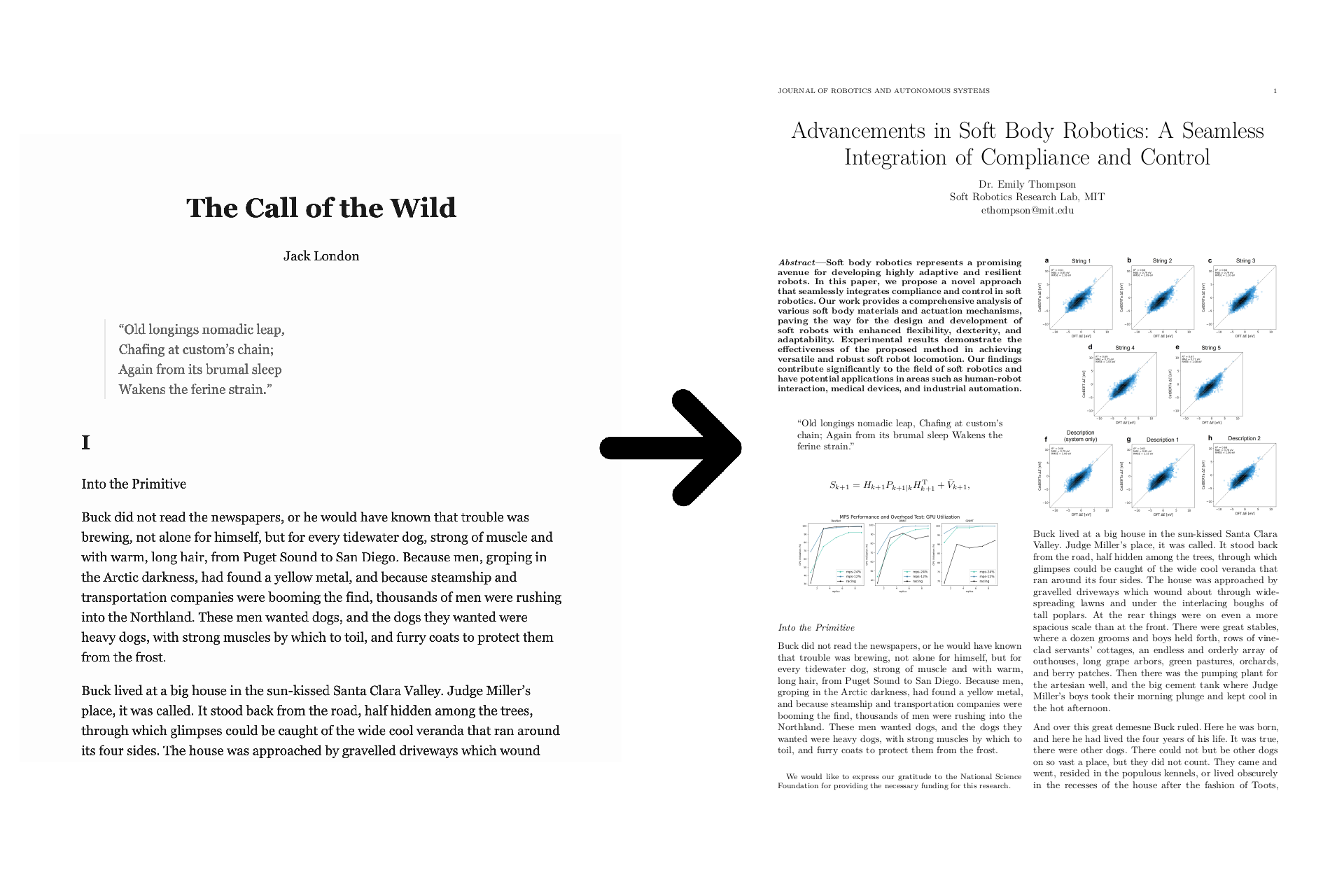

Paperify transforms any document, web page, or ebook into a research paper.

The text of the generated paper is the same as the text of the original

document, but figures and equations from real papers are interspersed

throughout.

A paper title and abstract are added (optionally generated by ChatGPT, if you

provide an API key), and the entire paper is compiled with the IEEE $\LaTeX$

template for added realism.

# Install

First, install the dependencies (or [use Docker](#docker)):

- curl

- Python 3

- Pandoc

- jq

- LaTeX (via TeXLive)

- ImageMagick (optional)

For example, on Debian-based systems (_e.g._, Debian, Ubuntu, Kali, WSL):

``` bash

sudo apt update

sudo apt install --no-install-recommends \

pandoc \

curl ca-certificates \

jq \

python3 \

imagemagick \

texlive texlive-publishers texlive-science lmodern texlive-latex-extra

```

Then, clone the repo (or directly pull the script), and execute it.

``` bash

curl -L https://github.com/jstrieb/paperify/raw/master/paperify.sh \

| sudo tee /usr/local/bin/paperify

sudo chmod +x /usr/local/bin/paperify

paperify -h

```

# Examples

- [`examples/cox.pdf`](examples/cox.pdf)

Convert [Russ Cox's transcript of Doug McIlroy's talk on the history of Bell

Labs](https://research.swtch.com/bell-labs) into a paper saved to the `/tmp/`

directory as `article.pdf`.

```

paperify \

--from-format html \

"https://research.swtch.com/bell-labs" \

/tmp/article.pdf

```

- [`examples/london.pdf`](examples/london.pdf)

Download figures and equations from the 1000 latest computer science papers

on `arXiv.org`. Intersperse the figures and equations into Jack London's

_Call of the Wild_ with a higher-than-default equation frequency. Use ChatGPT

to generate a paper title, author, abstract, and metadata for an imaginary

paper on soft body robotics. Save the file in the current directory as

`london.pdf`.

```

paperify \

--arxiv-category cs \

--num-papers 1000 \

--equation-frequency 18 \

--chatgpt-token "sk-[REDACTED]" \

--chatgpt-topic "soft body robotics" \

"https://standardebooks.org/ebooks/jack-london/the-call-of-the-wild/downloads/jack-london_the-call-of-the-wild.epub" \

london.pdf

```

## Docker

Alternatively, run Paperify from within a Docker container. To run the first

example from within Docker and build to `./build/cox.pdf`:

``` bash

docker run \

--rm \

-it \

--volume "$(pwd)/build":/root/build \

jstrieb/paperify \

--from-format html \

"https://research.swtch.com/bell-labs" \

build/cox.pdf

```

# Usage

```

usage: paperify [OPTIONS]